Integrate your Amazon DynamoDB table with machine learning for sentiment analysis | AWS Database Blog

Automating custom cost and usage tracking for member account owners in the AWS Migration Acceleration Program | AWS Cloud Operations & Migrations Blog

Performing Insert, update, delete and time travel on S3 data with Amazon Athena using Apache ICEBERG

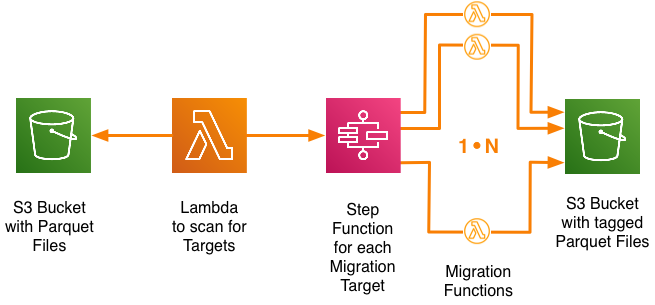

Controlled schema migration of large scale S3 Parquet data sets with Step Functions in a massively parallel manner | by Klaus Seiler | merapar | Medium

GitHub - nael-fridhi/csv-to-parquet-aws: Cloud / Data Ops mission: csv to parquet using aws s3 and lambda implemented using both golang and spark scala. Which implementation would be faster ?

Getting Started with Data Analysis on AWS using AWS Glue, Amazon Athena, and QuickSight: Part 1 | Programmatic Ponderings

Building Data Lakes in AWS with S3, Lambda, Glue, and Athena from Weather Data | The Coding Interface

Simplify operational data processing in data lakes using AWS Glue and Apache Hudi | AWS Big Data Blog

Dipankar Mazumdar🥑 on X: "Fast Copy-On-Write on Apache Parquet I recently attended a talk by @UberEng on improving the speed of upserts in data lakes. This is without any table formats like

amazon web services - Why is parquet record conversion with Kinesis Datafirehose creating None column in created parquet file? - Stack Overflow

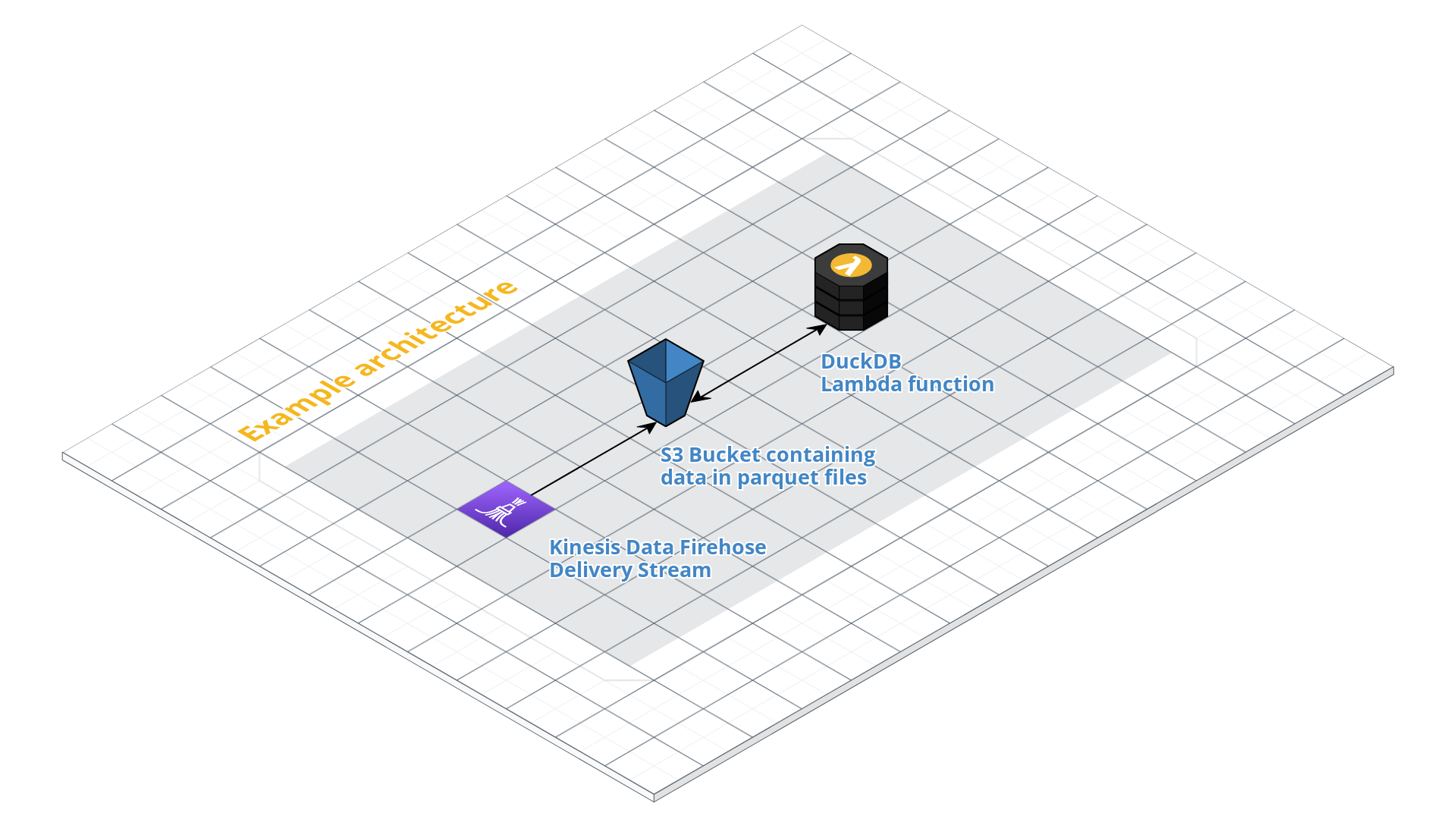

Serverless Data Engineering: How to Generate Parquet Files with AWS Lambda and Upload to S3 - YouTube